Cloud data integration helps organizations improve their operations. It breaks down data silos, streamlines workflows, and supports fast, accurate decision-making by providing high-quality data. But what does implementing cloud data integration actually entail? Let’s start with the key components of cloud data integration:

Data Sources and Endpoints

Data originates from different sources, including databases, enterprise applications, and cloud-based systems. Here, we’ll cover how to identify these sources and the mechanisms used to connect to them securely and retrieve their data.

Types of Data Sources

- Relational Databases: Structured databases like MySQL, PostgreSQL, and Oracle are common sources of transactional data.

- Enterprise Applications: Systems such as CRM (e.g., Salesforce) and ERP (e.g., SAP) often hold critical business data.

- Cloud Services: Cloud-based data repositories (e.g., AWS S3, Google Cloud Storage) and SaaS applications offer accessible data points.

- IoT Devices: Internet of Things devices generate large volumes of real-time data. This data is increasingly integrated into business analytics.

Connectivity Methods

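- APIs: Application Programming Interfaces (e.g., REST, SOAP) let systems exchange data programmatically. Combined with authentication and encryption (such as access tokens over HTTPS), they enable secure communication between systems.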

- ODBC/JDBC Connectors: Used for database connections, ensuring that data can be queried directly.

- Data Virtualization: Combines data from multiple sources virtually. This allows for integration without moving or duplicating data.

Security Considerations

- Secure data handling at endpoints is essential to prevent unauthorized access. Encryption, tokenization of sensitive fields, and role-based access control are critical for secure integration.
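To make these endpoint controls concrete, here is a minimal Python sketch that combines role-based access control with tokenization. The role names, field lists, and the `secure_view` and `tokenize` helpers are hypothetical. Tokenization is approximated with a keyed HMAC-SHA256 hash, a simplified stand-in for vault-based tokenization services, and encryption is assumed to be handled by the transport (for example, TLS).

```python
import hashlib
import hmac

# Hypothetical role-to-field permissions; a real deployment would load these
# from an identity provider or policy store.
ROLE_PERMISSIONS = {
    "admin": {"customer_id", "email", "order_total"},
    "analyst": {"customer_id", "order_total"},
}

# Fields that are tokenized (never passed in clear text) for roles without access.
SENSITIVE_FIELDS = {"email"}


def tokenize(value: str, key: bytes) -> str:
    """Replace a value with a deterministic keyed hash (HMAC-SHA256).

    The same input always yields the same token, so tokenized columns can still
    be joined, but without the key the original value cannot practically be recovered.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def secure_view(record: dict, role: str, key: bytes) -> dict:
    """Return the fields a role may see, tokenizing sensitive fields it may not."""
    if role not in ROLE_PERMISSIONS:
        raise PermissionError(f"role {role!r} has no access")
    allowed = ROLE_PERMISSIONS[role]
    view = {}
    for field, value in record.items():
        if field in allowed:
            view[field] = value
        elif field in SENSITIVE_FIELDS:
            view[field] = tokenize(str(value), key)
        # Any other field is dropped from the view entirely.
    return view
```

For example, calling `secure_view(record, "analyst", key)` keeps `customer_id` and `order_total`, replaces `email` with a 16-character token, and drops any other field; an unknown role raises `PermissionError`.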

Integration Platforms

Cloud integration platforms are software tools and frameworks that facilitate cloud-based data integration across environments, making it easier to process and analyze big data. They provide a centralized way to manage data flows, connect diverse systems, and scale as data volumes grow.

Popular Platforms

- DCKAP Integrator: The DCKAP Integrator is an ERP integration platform built for manufacturers and distributors. It connects business systems to each other, including e-commerce platforms, CRMs, PIM, EDI, and more.

- MuleSoft: MuleSoft is known for its API-led connectivity. It allows integration across cloud, SaaS, and on-premise software.

- Informatica: Informatica is a leading vendor of data governance and cloud data integration software. It caters to industries with strict compliance needs.

Key Platform Features

- Pre-built Connectors: Platforms often include connectors for popular software like SAP, AWS, and Salesforce. This saves companies significant time and effort in setting up connections.

- Low-code/No-code Interfaces: These interfaces democratize data integration, letting non-technical users build and manage integrations with drag-and-drop tools.

- Scalability and Flexibility: With multi-cloud support, cloud data integration platforms help organizations scale up as needed.

Criteria for Choosing a Good Platform

- Customization Capabilities: If specific workflows or data transformation steps are required, it’s crucial to select a platform with robust customization options.

- Compatibility: Evaluate whether the platform offers connectors for your current data sources and the flexibility to expand as systems evolve.

- Licensing and Costs: Platforms vary widely in cost, ranging from open-source solutions like Apache NiFi, to customizable solutions like DCKAP Integrator, to enterprise solutions like Informatica.

- Community and Support: Strong customer support and an active user community can be invaluable when deploying and troubleshooting integration processes.

Data Transformation and Processing

Data transformation prepares raw data for storage and analysis. This ensures it’s compatible, clean, and standardized. The two main processes that accomplish this are ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform). They handle data differently based on infrastructure and requirements.

ETL Process

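In the ETL process, data is extracted from the source, transformed on a separate processing server, and then loaded into the destination system. This approach is common in on-premises setups and smaller cloud environments. It remains popular for processing transactional data that requires heavy transformation before analysis. In ELT, by contrast, raw data is loaded into the destination first (typically a cloud data warehouse) and transformed there, using the warehouse’s own compute.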
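The ETL ordering can be sketched in Python with only the standard library. The CSV export, the `orders` table, and the cleanup rules are hypothetical, and an in-memory SQLite database stands in for the destination system; the point is that every transformation runs before anything is loaded.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (an order table dumped to CSV).
RAW_CSV = """order_id,customer,amount,currency
1001, Acme Corp ,250.00,usd
1002,Globex,99.5,USD
1002,Globex,99.5,USD
"""


def extract(raw: str) -> list[dict]:
    """Extract: read rows from the source export."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop duplicate orders, trim text, cast types, uppercase currency codes."""
    seen = set()
    cleaned = []
    for row in rows:
        order_id = int(row["order_id"])
        if order_id in seen:
            continue
        seen.add(order_id)
        cleaned.append((
            order_id,
            row["customer"].strip(),
            round(float(row["amount"]), 2),
            row["currency"].upper(),
        ))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the already-transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for the destination system
load(transform(extract(RAW_CSV)), conn)
```

In an ELT variant, the raw rows would be loaded into a staging table first, and the same cleanup would then run as SQL inside the warehouse.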

Data Mapping and Cleaning

Data Mapping ensures that source data is compatible with destination schemas. This is critical when data from multiple sources is combined, for example, when merging customer data from a CRM and an e-commerce platform.
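Field mapping is often expressed as a lookup table from source field names to destination columns. The sketch below assumes two hypothetical sources, a CRM and an e-commerce platform, with made-up field names. `map_record` renames fields and fills any missing destination column with `None`, so both sources produce records with the same shape.

```python
# Hypothetical field mappings from two source systems to one destination schema.
FIELD_MAPS = {
    "crm": {"AccountName": "name", "EmailAddress": "email", "Phone": "phone"},
    "ecommerce": {"customer_name": "name", "email": "email", "phone_number": "phone"},
}

# Columns every destination record must have, in schema order.
TARGET_FIELDS = ("name", "email", "phone", "source")


def map_record(record: dict, source: str) -> dict:
    """Rename source fields to destination columns and tag the record's origin."""
    mapping = FIELD_MAPS[source]
    mapped = {target: record.get(src) for src, target in mapping.items()}
    mapped["source"] = source
    # Guarantee every destination column exists, even if the source lacked it.
    return {field: mapped.get(field) for field in TARGET_FIELDS}
```

Keeping the mappings as data rather than code means a new source can be onboarded by adding one dictionary entry.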

Data Cleaning, just like it says on the tin, involves cleaning up the datasets. This means removing duplicates, correcting errors, and standardizing data formats to maintain quality. Automated tools detect anomalies, making data consistent and reliable.
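Here is a minimal sketch of those cleaning steps in Python. It assumes hypothetical customer records deduplicated by email address: it standardizes formats (lowercased emails, collapsed whitespace, digits-only phone numbers), drops records with a missing or malformed email, and keeps the first record seen for each address. Production tools typically add fuzzy matching, which this sketch does not attempt.

```python
import re

# Deliberately loose email check: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_customers(records: list[dict]) -> list[dict]:
    """Standardize formats, drop invalid rows, and remove duplicates by email."""
    cleaned = {}
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not EMAIL_PATTERN.match(email):
            continue  # unusable record: missing or malformed email
        name = " ".join((rec.get("name") or "").split()).title()
        phone = re.sub(r"\D", "", rec.get("phone") or "")
        # Keep the first record seen for each email address.
        cleaned.setdefault(email, {"email": email, "name": name, "phone": phone})
    return list(cleaned.values())
```

Using email as the deduplication key is an assumption; many datasets need a composite key or a match score instead.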


Data Standardization

To ensure compatibility across systems, data is standardized, meaning data from disparate sources is brought into unified formats. This makes integration and analysis smoother and more accurate. It can be as simple as converting all date fields to a single format (e.g., YYYY-MM-DD) or as complex as restructuring entire data schemas to match the target system’s requirements.

Common standardization tasks include:

- Normalizing text fields: Setting text to uppercase or lowercase for consistency.

- Converting units of measurement: Transforming imperial units to metric.

- Harmonizing numerical formats: Ensuring consistent decimal usage across financial figures.
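The three tasks above can be sketched for a single product record in Python. The field names (`sku`, `length_in`, `weight_lb`, `price`) are hypothetical. The conversion factors are the exact definitions of the inch (2.54 cm) and the pound (0.45359237 kg), and `Decimal` is used so financial figures keep consistent decimal places without floating-point rounding surprises.

```python
from decimal import Decimal, ROUND_HALF_UP

INCH_TO_CM = Decimal("2.54")
LB_TO_KG = Decimal("0.45359237")


def standardize_product(rec: dict) -> dict:
    """Apply text, unit, and numeric standardization to one product record."""
    return {
        # Normalize text: trimmed, uppercase SKUs.
        "sku": rec["sku"].strip().upper(),
        # Convert units: imperial length and weight to metric.
        "length_cm": (Decimal(str(rec["length_in"])) * INCH_TO_CM).quantize(
            Decimal("0.01"), rounding=ROUND_HALF_UP
        ),
        "weight_kg": (Decimal(str(rec["weight_lb"])) * LB_TO_KG).quantize(
            Decimal("0.001"), rounding=ROUND_HALF_UP
        ),
        # Harmonize numbers: assumes "," is a thousands separator, then fixes
        # prices to exactly two decimal places.
        "price": Decimal(str(rec["price"]).replace(",", "")).quantize(
            Decimal("0.01"), rounding=ROUND_HALF_UP
        ),
    }
```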

For organizations that handle data from international systems with varying conventions, this process also extends to adjusting for regional differences:

- Currency Conversion: Sales data from different countries may require conversion to a standard currency (e.g., USD or EUR) to enable accurate financial analysis.

- Time Zone Standardization: To compare time-based data accurately, timestamps may be converted to a single time zone, such as UTC.

- Language and Terminology Normalization: Translating product names or descriptions into a common language or format can ensure consistency, particularly in multilingual datasets.
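A minimal sketch of those regional adjustments follows. The exchange rates and the product glossary are hypothetical placeholders (real pipelines usually pull dated rates from a rates service), and timestamps are assumed to arrive as ISO 8601 strings with an explicit UTC offset, which Python’s `datetime.fromisoformat` can parse.

```python
from datetime import datetime, timezone
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical fixed exchange rates to USD, for illustration only.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "INR": Decimal("0.012")}

# Hypothetical glossary mapping localized product terms to one canonical name.
TERM_MAP = {"tornillo": "screw", "schraube": "screw", "vis": "screw"}


def standardize_sale(sale: dict) -> dict:
    """Convert currency to USD, timestamps to UTC, and product terms to English."""
    amount = Decimal(sale["amount"]) * RATES_TO_USD[sale["currency"]]
    # fromisoformat understands explicit offsets such as "+05:30".
    ts_utc = datetime.fromisoformat(sale["timestamp"]).astimezone(timezone.utc)
    product = sale["product"].strip().lower()
    return {
        "amount_usd": amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP),
        "timestamp_utc": ts_utc.isoformat(),
        "product": TERM_MAP.get(product, product),
    }
```

For example, a sale of 1000 INR stamped `2024-03-01T09:30:00+05:30` becomes 12.00 USD at `2024-03-01T04:00:00+00:00`.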

Data standardization not only facilitates compatibility but also reduces errors and discrepancies in analysis. This allows for clearer insights and more reliable decision-making. A 2022 study by Accenture on compliance risk emphasized that standardized data practices play a critical role in mitigating operational and compliance risks. This is especially important in sectors like finance and healthcare where accuracy is crucial.

Data Enrichment

Data enrichment appends additional information to a dataset, adding context that makes it more valuable for analysis. The extra information typically comes from external sources or other internal systems, making the original data more informative and actionable.

Data enrichment can also add geographic or psychographic data. Adding geolocation details lets businesses assess regional demand trends, which in turn helps them target location-based marketing and optimize inventory across regions.

For B2B applications, enrichment may involve adding firmographic data (industry, company size, revenue) to existing client records. This helps companies segment their prospects by industry or enterprise scale. With enriched datasets, a B2B company could focus marketing efforts on sectors with a higher likelihood of conversion.
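Enrichment is often a lookup-and-join against reference data. In the sketch below, the firmographic table, the postal-code-to-region table, and the 1,000-employee cutoff between "Enterprise" and "SMB" segments are all hypothetical; a real pipeline would typically query a data provider instead of a hard-coded dictionary.

```python
# Hypothetical reference data keyed by company email domain and postal code.
FIRMOGRAPHICS = {
    "acme.example": {"industry": "Manufacturing", "employees": 1200},
    "globex.example": {"industry": "Distribution", "employees": 85},
}
REGIONS = {"60601": "Midwest", "10001": "Northeast"}

ENTERPRISE_MIN_EMPLOYEES = 1000  # hypothetical segmentation cutoff


def enrich(customer: dict) -> dict:
    """Return a copy of the customer with industry, segment, and region added."""
    domain = customer["email"].split("@")[-1].lower()
    firmo = FIRMOGRAPHICS.get(domain, {})
    enriched = dict(customer)  # leave the original record untouched
    enriched["industry"] = firmo.get("industry", "Unknown")
    employees = firmo.get("employees")
    if employees is None:
        enriched["segment"] = None
    else:
        enriched["segment"] = "Enterprise" if employees >= ENTERPRISE_MIN_EMPLOYEES else "SMB"
    enriched["region"] = REGIONS.get(customer.get("postal_code"), "Unknown")
    return enriched
```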

Enrichment enhances not only marketing but also risk assessment and operational efficiency. Financial institutions, for example, often enrich data with credit scores or fraud risk indicators. Logistics companies may incorporate weather and traffic data into delivery schedules. The combination of enriched internal data with pertinent external insights enables businesses to make better-informed, data-driven decisions across a range of functions.

Importance of Transformation

Data transformation is essential for converting raw, unstructured data into a format that’s ready for analysis and integration. It ensures data consistency, quality, and usability, all of which are critical for reliable insights and decision-making.

Key benefits of data transformation:

- Enhances Data Quality: By cleaning and normalizing data, transformation removes errors and inconsistencies. This reduces the risk of inaccurate insights.

- Supports Advanced Analytics: Structured data is foundational for analytics and AI. It provides the consistency and reliability needed for accurate modeling and predictions.

- Improves Decision-making: Transformation allows data to be interpreted faster and more accurately. This empowers businesses to make timely, data-driven decisions.

- Increases Operational Efficiency: Standardized and streamlined data reduces processing time. This enables faster data flows and enhances system responsiveness.

Types of Cloud Data Integration

Cloud data integration encompasses various approaches to suit different business needs and data environments. The three primary types—batch integration, real-time integration, and data virtualization—offer unique benefits and applications depending on the volume, velocity, and variability of data.

Batch Data Integration

Batch data integration involves collecting and processing data in groups or “batches” at scheduled intervals. This approach is best suited for scenarios where real-time updates are unnecessary and large volumes of data need to be consolidated efficiently.

- How It Works: Data from multiple sources is aggregated and transferred to a central system for processing and storage. These operations typically occur during non-peak hours to minimize system strain.

- Key Use Cases:

  - Reporting and Analytics: Generating daily, weekly, or monthly reports based on consolidated data.

  - Data Migration: Moving data from legacy systems to modern cloud environments in bulk.

  - ETL Workflows: Extracting, transforming, and loading large datasets into cloud data warehouses.

- Advantages:

  - Cost-effective for large data volumes

  - Simplifies integration workflows for non-urgent data needs

- Challenges:

  - Unsuitable for real-time updates or decision-making

  - Higher latency between data collection and availability
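As a minimal sketch of a batch load, the Python code below writes accumulated records in fixed-size chunks, committing one transaction per chunk so a failure rolls back only the batch in progress. The `events` table, the batch size, and the in-memory SQLite destination are assumptions for illustration; in practice a scheduler (such as cron or the integration platform’s own scheduler) would trigger the job during off-peak hours.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator


def batched(rows: Iterable, size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` rows."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def run_batch_load(rows: Iterable[tuple], conn: sqlite3.Connection, batch_size: int = 500) -> int:
    """Load accumulated rows in fixed-size batches and return the number loaded."""
    conn.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT)")
    loaded = 0
    for chunk in batched(rows, batch_size):
        with conn:  # one transaction per batch; rolls back this batch on error
            conn.executemany("INSERT INTO events VALUES (?, ?)", chunk)
        loaded += len(chunk)
    return loaded
```

Python 3.12 ships `itertools.batched`, which yields tuples; the small helper here keeps the sketch working on older versions.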

Real-Time Data Integration

Real-time data integration focuses on delivering data as soon as it is created or updated, with minimal delay. This allows for faster decision-making and responsiveness.

- How It Works: Data flows continuously between different systems through streaming technologies or event-driven architectures. Systems like Apache Kafka or AWS Kinesis are commonly used to facilitate real-time data streaming.

- Key Use Cases:

  - Operational Dashboards: Monitoring live metrics such as website traffic, sales, or inventory levels.

  - Fraud Detection: Identifying and responding to suspicious transactions as they occur.

  - Customer Experience: Updating user profiles in real time to provide personalized recommendations or service.

- Advantages:

  - Enables near-instant insights and actions

  - Ideal for time-sensitive applications

- Challenges:

  - More complex and resource-intensive than batch processing

  - Requires robust infrastructure to handle high data velocity
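Here is a minimal sketch of the event-driven pattern behind real-time fraud detection: each event is evaluated the moment it arrives, using a per-card sliding window. A plain Python list stands in for a Kafka topic or Kinesis stream (no client library is shown), and the thresholds and event fields (`card`, `amount`, `ts`) are hypothetical.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator

# Hypothetical thresholds, for illustration only.
MAX_AMOUNT = 5_000.0
MAX_TXNS_PER_WINDOW = 3
WINDOW_SECONDS = 60


def detect_fraud(events: Iterable[dict]) -> Iterator[dict]:
    """Consume transaction events one at a time and yield alerts immediately.

    Each event is a dict with 'card', 'amount', and 'ts' (epoch seconds),
    assumed to arrive in timestamp order.
    """
    recent = defaultdict(deque)  # card -> timestamps inside the sliding window
    for event in events:
        window = recent[event["card"]]
        window.append(event["ts"])
        # Drop timestamps that have fallen out of the window.
        while window and window[0] <= event["ts"] - WINDOW_SECONDS:
            window.popleft()
        if event["amount"] > MAX_AMOUNT:
            yield {"card": event["card"], "reason": "amount", "ts": event["ts"]}
        elif len(window) > MAX_TXNS_PER_WINDOW:
            yield {"card": event["card"], "reason": "velocity", "ts": event["ts"]}
```

Because `detect_fraud` is a generator, an alert is emitted as soon as the offending event is processed, rather than after the whole input has been read.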

Data Virtualization

Data virtualization offers a unified view of data from multiple sources without physically moving or replicating it. This approach is ideal for organizations needing real-time access to diverse datasets without the overhead of traditional integration methods.

- How It Works: A virtual data layer acts as an abstraction, allowing users to query and access data from disparate sources (e.g., databases, applications, cloud storage) in real time. Denodo and TIBCO Data Virtualization (formerly Cisco Data Virtualization) are two common tools.

- Key Use Cases:

  - Business Intelligence: Providing analysts with a single view of disparate datasets for quicker insights.

  - Regulatory Compliance: Accessing data across systems for audit trails without disrupting operations.

  - Hybrid Environments: Integrating on-premises and cloud data seamlessly.

- Advantages:

  - Avoids replication delays, since queries read current data directly from the sources

  - Simplifies data management across diverse systems

- Challenges:

  - May not handle high-volume data processing as efficiently as traditional methods

  - Requires careful design to ensure performance and scalability
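The core idea of a virtual data layer, querying sources in place at request time, can be sketched in a few lines of Python. Here an in-memory SQLite database plays the role of an on-premises ERP and a plain function plays the role of a cloud CRM client; both, along with the `VirtualCustomerView` class and its field names, are hypothetical. Commercial virtualization tools add capabilities such as query optimization and caching on top of this basic pattern.

```python
import sqlite3
from typing import Callable, Optional


class VirtualCustomerView:
    """Combines two live sources at query time without copying their data."""

    def __init__(self, erp_conn: sqlite3.Connection, fetch_crm: Callable[[int], dict]):
        self.erp_conn = erp_conn    # hypothetical on-premises ERP, queried in place
        self.fetch_crm = fetch_crm  # hypothetical cloud CRM client

    def get_customer(self, customer_id: int) -> Optional[dict]:
        row = self.erp_conn.execute(
            "SELECT id, name, credit_limit FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        if row is None:
            return None
        crm = self.fetch_crm(customer_id)  # fetched on demand, never stored
        return {
            "id": row[0],
            "name": row[1],
            "credit_limit": row[2],
            "account_owner": crm.get("owner"),
        }
```

Because nothing is replicated, an update in the ERP is visible on the very next query; the trade-off is that every request reaches the source systems.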

Benefits of Cloud Data Integration

Cloud data integration empowers organizations to unify disparate data sources, streamline operations, and unlock new opportunities for innovation. By centralizing data management and enhancing accessibility, integration offers a range of benefits that drive efficiency, agility, and informed decision-making.

Centralized Data Access

Cloud data integration brings together data from multiple sources—databases, applications, IoT devices, and cloud storage—into a single, unified view.

- Key Advantage: Different teams can access consistent and reliable data without switching between systems. This saves time and reduces errors.

- Example: Coca-Cola implemented the Consumer Data Service (CDS) 2.0 solution. By integrating data from various sources into a centralized cloud-based platform, the company gave its leadership real-time visibility into global performance metrics. This unified view enables more informed decision-making and enhances operational efficiency across the company’s international operations.

Enhanced Data Quality

Integration processes often include data cleansing and validation. This ensures the data used for analytics and decision-making is accurate and consistent.

- Key Advantage: Improves trust in the data and minimizes errors in reporting or analysis.

- Example: University of Pittsburgh Medical Center (UPMC) implemented an Enterprise Master Patient Index (EMPI) to integrate patient records across its various departments and facilities. The integration involved data cleansing and validation, which effectively eliminated duplicate records and ensured that each patient’s medical history was complete and accurate.

Real-Time Insights

With real-time data integration, businesses can access up-to-date information as it happens. This enables faster and more informed decisions.

- Key Advantage: Supports agility in dynamic environments, such as monitoring operational metrics or responding to market trends.

- Example: Uber’s business model relies heavily on real-time data to match riders with drivers, estimate arrival times, and adjust pricing dynamically. To achieve this, Uber developed a robust data infrastructure capable of processing and analyzing data streams from millions of users and drivers simultaneously.

Scalability and Flexibility

Cloud-based integration platforms can scale with growing data volumes and changing business needs, and many support both batch and real-time processing.

- Key Advantage: Eliminates the need for costly hardware upgrades and adapts to fluctuating workloads.

- Example: Netflix runs much of its streaming service’s back-end infrastructure on Amazon Web Services (AWS). By leveraging AWS’s scalable infrastructure, Netflix efficiently handles vast amounts of data, including viewership metrics from millions of users worldwide. Netflix can manage peak traffic during major content releases without the need for hardware upgrades.

Cost Efficiency

By leveraging cloud infrastructure, organizations reduce costs associated with maintaining on-premises hardware and resources for data integration.

- Key Advantage: Pay-as-you-go models in cloud services ensure companies only pay for what they use. This makes integration cost-effective.

- Example: By migrating its data infrastructure to AWS, Airbnb eliminated the need for extensive on-premises hardware, reducing operational costs. This transition allowed Airbnb to integrate data from sources like customer interactions, bookings, and host information into a unified platform. The pay-as-you-go model of AWS allowed Airbnb to scale its data integration efforts cost-effectively, aligning expenses with actual usage and supporting its rapid growth.

Better Collaboration

Unified and accessible data fosters collaboration across teams and departments. This breaks down silos and promotes a data-driven culture.

- Key Advantage: Enables cross-functional teams to work together seamlessly using shared, up-to-date information.

- Example: Nestlé’s partnership with Deloitte to develop a Microsoft Azure Data Lake, which started in 2018, is a good example of the collaboration that cloud data integration makes possible. This centralized data repository dismantled information silos and gave relevant roles access to shared, up-to-date data. As a result, cross-functional teams could collaborate more effectively and use high-quality information to drive informed decision-making and innovation.

Regulatory Compliance

Integrated data systems simplify compliance by providing a clear, centralized view of data that meets regulatory requirements.

- Key Advantage: Makes audits and reporting more efficient, reducing the risk of penalties.

- Example: Varo Bank, working with Wolters Kluwer, managed multiple sizable efforts in parallel to ensure the bank was ready in time for its clients. This helped the bank meet its obligations to regulators and other stakeholders of the business and keep pace with changing regulatory requirements.


Challenges in Cloud Data Integration

While cloud data integration offers numerous advantages, it comes with its own set of challenges. These obstacles often stem from the complexity of managing diverse systems, ensuring data quality, and maintaining security in dynamic environments.

Complexity of Diverse Data Sources

Organizations often deal with a variety of data formats, structures, and protocols. This complicates the integration process.

- Key Issue: Standardizing and harmonizing data across diverse sources can be resource-intensive.

- Solution: Implementing a middleware or data integration platform that supports multiple formats and protocols.
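One way a middleware layer handles multiple formats is an adapter registry: each format gets its own parser, and every parser’s output is mapped into one common record shape. The sketch below assumes hypothetical inventory payloads with `sku` and `qty` fields and uses only Python’s standard-library JSON, CSV, and XML parsers.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET  # for untrusted input, prefer a hardened parser


def parse_json(payload: str) -> list[dict]:
    return json.loads(payload)


def parse_csv(payload: str) -> list[dict]:
    return list(csv.DictReader(io.StringIO(payload)))


def parse_xml(payload: str) -> list[dict]:
    # Expects <root><item><field>value</field>...</item>...</root>.
    root = ET.fromstring(payload)
    return [{child.tag: child.text for child in item} for item in root]


PARSERS = {"json": parse_json, "csv": parse_csv, "xml": parse_xml}


def to_common_records(payload: str, fmt: str) -> list[dict]:
    """Parse a payload in any registered format into the shared record shape."""
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None
    return [{"sku": str(r["sku"]), "qty": int(r["qty"])} for r in parser(payload)]
```

Supporting a new source format then means registering one more parser, while downstream steps keep consuming the same record shape.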

Data Quality Issues

Poor data quality—such as duplicates, errors, or incomplete data—can undermine the success of integration efforts.

- Key Issue: Unreliable data leads to inaccurate analytics and flawed decision-making.

- Solution: Adopting automated data cleansing and validation tools. Incorporating machine learning models to detect anomalies and duplicates. Using master data management (MDM) platforms to maintain consistency.
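A lightweight statistical check can catch many anomalies before a machine learning model is needed. The sketch below is a swapped-in technique, not an ML model: it flags values whose modified z-score, based on the median absolute deviation (MAD), exceeds a threshold. The 0.6745 scaling constant and the 3.5 cutoff are conventional choices for this method, and the function name is hypothetical.

```python
from statistics import median


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds `threshold`.

    The median absolute deviation (MAD) is less distorted by the outliers
    themselves than a mean/standard-deviation z-score.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]
```

For example, `flag_outliers([100, 102, 98, 101, 99, 100, 10000])` returns `[6]`, flagging only the 10,000 entry.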

Data Silos

Integrating data from isolated or incompatible systems can be a significant challenge. This is especially true for legacy platforms or proprietary software.

- Key Issue: Data silos restrict access and create inconsistencies across an organization.

- Solution: Implementing a data virtualization layer that provides a unified view without physically moving data. Encouraging cross-departmental collaboration by introducing shared data governance policies.

Monitoring and Maintenance

Once data integration workflows are established, ensuring their smooth operation and resolving issues can be an ongoing challenge.

- Key Issue: Integration pipelines require continuous monitoring to detect bottlenecks, errors, or performance issues.

- Solution: Opting for tools with built-in observability features, such as DCKAP Integrator, lets users monitor data pipelines in real time.
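Built-in observability varies by platform, but the underlying idea can be sketched with a Python decorator that times each pipeline step and records an alert when a step fails or exceeds a duration budget. The `monitored` decorator, step names, and thresholds are hypothetical; in production the alerts would be routed to a monitoring or paging tool rather than appended to a list.

```python
import functools
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("pipeline")


def monitored(step_name: str, max_seconds: float, alerts: list) -> Callable:
    """Time a pipeline step and record alerts for failures or slow runs."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception as exc:
                alerts.append(f"{step_name} failed: {exc}")
                logger.error("step %s failed: %s", step_name, exc)
                raise  # let the orchestrator decide whether to retry
            finally:
                elapsed = time.perf_counter() - start
                logger.info("step %s took %.3fs", step_name, elapsed)
                if elapsed > max_seconds:
                    alerts.append(f"{step_name} slow: {elapsed:.2f}s > {max_seconds}s")
        return wrapper
    return decorator
```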

High Costs and Resource Requirements

Data integration, especially real-time or complex setups, can demand significant investments in tools, infrastructure, and expertise.

- Key Issue: Smaller organizations may find it difficult to balance costs with the expected benefits.

- Solution: Leveraging pay-as-you-go cloud platforms like AWS or Google Cloud, which allow cost control by scaling resources up or down based on actual usage. Optimizing costs through reserved instances or spot pricing.

Scalability Challenges

While cloud platforms are inherently scalable, poorly designed integration workflows may struggle to handle spikes in data volume or velocity.

- Key Issue: Inadequate scaling can lead to delays, failures, or increased costs.

- Solution: Designing integration workflows to be event-driven and use horizontally scalable architectures, such as microservices.
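A minimal sketch of horizontal scaling: partition the workload by a stable hash of a key so independent workers can process partitions in parallel, then add workers as volume grows. A thread pool stands in for separate service instances or containers, and the event shape and the `run` helper are hypothetical. `zlib.crc32` is used instead of Python’s built-in `hash()`, because string hashes are randomized per process and would route the same key differently on different workers.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition(events: list[dict], num_partitions: int) -> dict[int, list[dict]]:
    """Assign each event to a partition by a stable hash of its key.

    All events for the same key land in the same partition, so partitions can
    be processed independently and in parallel.
    """
    parts = defaultdict(list)
    for event in events:
        parts[zlib.crc32(event["key"].encode("utf-8")) % num_partitions].append(event)
    return dict(parts)


def process_partition(events: list[dict]) -> dict[str, int]:
    """Stand-in for real work: total the quantity per key within one partition."""
    totals = defaultdict(int)
    for event in events:
        totals[event["key"]] += event["qty"]
    return dict(totals)


def run(events: list[dict], workers: int) -> dict[str, int]:
    """Process all partitions in parallel and merge the per-partition results."""
    parts = partition(events, workers)
    results: dict[str, int] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(process_partition, parts.values()):
            results.update(partial)  # keys never overlap across partitions
    return results
```

Because each key is owned by exactly one partition, the merged result is the same whether one worker or many handle the load.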

Best Practices for Cloud Data Integration

Implementing cloud data integration effectively requires a strategic approach to ensure data quality, security, and scalability. By following best practices, organizations can overcome common challenges and maximize the benefits of their integration efforts.

Define Clear Objectives

Before initiating any integration project, it’s essential to identify the specific goals and outcomes you aim to achieve.

What to Do: Outline the purpose of integration. This can include improving analytics, streamlining operations, or enhancing customer experience.

Ensure Data Quality from the Start

Data quality issues, if not addressed early, can compromise the success of the integration process.

What to Do: Implement validation, cleaning, and deduplication processes before transferring data to ensure accuracy.

Choose the Right Integration Platform

Selecting a platform that aligns with your business needs is critical for a smooth and efficient integration process.

What to Look For: Evaluate platforms for their compatibility with existing systems, scalability, industry expertise and ease of use (e.g., support for low-code/no-code tools).

Leverage Real-Time and Batch Processing as Needed

Choosing the right integration mode—real-time or batch—based on the use case ensures efficiency.

What to Do: Use real-time processing for dynamic, time-sensitive operations and batch processing for non-urgent, large-scale data transfers.

Invest in Scalability

Workflows and infrastructure should be designed with scalability in mind to handle growing data volumes and business needs.

What to Do: Opt for cloud platforms that can scale elastically and design workflows that can handle variable data loads.

Monitor and Optimize Continuously

Integration pipelines require ongoing maintenance and monitoring to ensure optimal performance.

What to Do: Set up automated alerts for bottlenecks or errors and regularly review workflows for improvements.

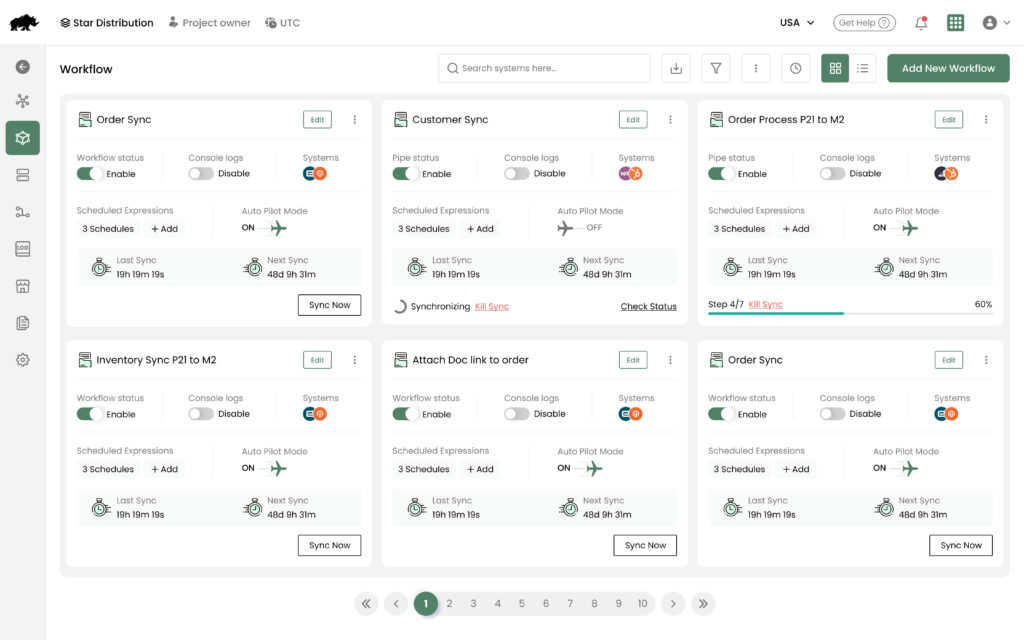

DCKAP Integrator: Cloud Data Integration for Manufacturers and Distributors

The DCKAP Integrator is a powerful solution tailored specifically for manufacturers and distributors. By simplifying complex tasks and ensuring seamless data flow between systems like ERPs, CRMs, and e-commerce platforms, the DCKAP Integrator empowers businesses to:

- Streamline Operations: Reduce inefficiencies and improve collaboration across departments.

- Enhance Data Accuracy: Eliminate silos and ensure consistent, reliable data for decision-making.

- Support Growth and Scalability: Easily adapt to evolving business needs and integrate new systems effortlessly.

With a focus on scalability, security, and ease of use, the DCKAP Integrator positions itself as a critical tool for companies looking to embrace digital transformation and stay competitive in their industries. For manufacturers and distributors, choosing the right integration platform can mean the difference between simply managing data and fully harnessing its potential to drive success.

Ready to transform your business? Schedule a demo with DCKAP’s integration experts today and see how seamless integration can unlock your full potential.
